In honor of Earth Day, Harper Voyager authors are sharing their scientific knowledge in the form of the virtual science fair—follow the conversation on Twitter at #HVsciencefair.

Artificial intelligence has long been a staple of science fiction, but now we’re at the turning point where it’s quickly becoming our reality. Marvin, the mopey robot from The Hitchhiker’s Guide to the Galaxy, was probably once considered by readers to be a laugh-fetching gag, but now it’s prudent to ask how modeling such emotions can affect how AI is perceived. Johnny Cabs and Rosie the Robots are practically on our doorsteps, but what will their presence mean for our economy? Certainly, some human job loss will occur, but will having AI in the workforce be a boon to other entrepreneurs and creators?

Authors Marina J. Lostetter (Noumenon) and Nicky Drayden (The Prey of Gods) set out to answer these questions and more. Both of their novels feature sentient AIs—Lostetter’s a superintelligent interstellar convoy charged with the transport and care of a volatile crew, and Drayden’s a secretly sentient personal robot whose misguided antics might spark a liberation movement. In an attempt to separate science from science fiction, these two AI fanatics gathered an engineer, a hacker, and a futurist to pick their brains about the minds of tomorrow.

Felix Yuan (twitter) is a generalist engineer who’s worked at Slide, Google, YouTube, and Sosh. He currently works at Ozlo, a company developing a state-of-the-art, conversational AI to power the future of search. In his spare time he writes sci-fi/urban-fantasy involving magic mollusks.

Ross Goodwin (website | twitter) is an artist, creative technologist, hacker, gonzo data scientist, and former White House ghostwriter. He employs machine learning, natural language processing, and other computational tools to realize new forms and interfaces for written language. His projects—from word.camera, a camera that expressively narrates photographs in real time using artificial neural networks, to Sunspring (with Oscar Sharp, starring Thomas Middleditch), the world’s first film created from an AI-written screenplay—have earned international acclaim.

Martin Ford (website | twitter) is a futurist and the author of two books: The New York Times bestselling Rise of the Robots: Technology and the Threat of a Jobless Future and The Lights in the Tunnel: Automation, Accelerating Technology and the Economy of the Future, as well as the founder of a Silicon Valley-based software development firm. Martin is a frequent keynote speaker on the subject of accelerating progress in robotics and artificial intelligence—and what these advances mean for the economy, job market and society of the future.

Which fictional representation of artificial intelligence is your favorite and why?

FELIX: I’d have to say GLaDOS is my favorite. She’s a villainous AI in the same vein as HAL 9000 but with more sass and an even bigger murderous streak.

ROSS: Probably Samantha from Her by Spike Jonze, but only because it’s such an empathetic portrayal. I do not believe the film portrayed an entirely realistic picture of the near future. For example, it’s almost certain that the greeting cards at Beautifulhandwrittenletters.com would be written by machines.

MARTIN: I like the Matrix movies, both because they represent a very interesting and sophisticated conception of AI and because I think they could be quite relevant to the future. AI combined with virtual reality is likely to be extraordinarily addictive (perhaps nearly like a drug). I think we may see a lot of people spending a great deal of time in virtual worlds in the future—perhaps to the point that it could become a problem for society.

What are some common misconceptions about the current state of Artificial Intelligence?

ROSS: That AI should and will be human shaped, and that it will destroy us all. These are both wonderful fictional elements that have made many stories about AI the ones we know and love today, but they’re not healthy slices of reality. Real design requires real thought and consideration. (For example, ask yourself which robot makes more sense in real life, C-3PO or R2-D2. The answer should be obvious.)

MARTIN: Many people both under- and overestimate where we are regarding AI. They tend to underestimate the potential for specialized AI to have enormous impacts on society—in terms of white-collar jobs being automated, as well as in areas like privacy and so forth. On the other hand, many people overestimate how close we are to the true human-level AI you see in science fiction movies.

FELIX: I think the biggest misconception of AI is what AI actually is. I think there’s a grand expectation that AI is going to manifest itself as some sort of high-level intelligence that, if not human-like, will compete at the level of humanity or surpass it. The reality is that AI is already among us in far more mundane ways, from playing ancient board games all the way to driving cars. It’s much more limited than the public perception of it, which makes it all the more fascinating.

Do you think it’s important that AIs model human emotions? Do you think such modeling would realistically improve or hamper AI-human interactions in the long run?

ROSS: For therapeutic purposes—PTSD treatment, and many other similar use cases. For the most part, I do not believe it is an important component for general AI in the everyday lives of healthy people who can interact with other humans regularly.

Strangely, the only other use case that comes to mind is entertainment, and that’s an important one. We expect good entertainment to trigger our emotions in certain ways, and AI that can achieve such responses would be enormously valuable across the various media of mainstream entertainment.

I believe that whether such an AI would improve or hamper AI-human (or even human-human) interactions in the long run remains an open question that largely depends on how our societal entertainment addiction progresses in the decades to come.

MARTIN: I don’t think it is essential that AI have emotions—objective intelligence will work just fine in most cases. However, it will surely happen as there is already progress in this area. Algorithms can already recognize emotional reactions by people, for example. This will have both positives and negatives depending on how it’s used. On the plus side, algorithms might help with psychology, counseling, etc. On the negative side, emotional AIs will be used to influence consumer choices and even to outright deceive people….

If AI is capable of deception, how will humans be expected to trust it? Is it fair to hold AI to a higher standard of ethical behavior than we expect from ourselves?

FELIX: This question presupposes that AI will have to be human-like. The main reason that a human would tell a lie is that they realize that the truth is less conducive to getting what they want. An AI, though? An AI would have to be designed to want something in the first place.

Now, a further wrinkle is that even if an AI could lie, we would still be able to tell when it was lying. AIs are built by engineers, after all, and engineers like having visibility into why their creation is doing something. This would mean building logging and toolsets that allow them to see exactly what their AI is “thinking.”

However, this should not stop us from holding AI to a higher standard. Just because AI is capable of driving cars doesn’t mean it should have the same crash rate as us. We created AI to do certain things better than we could, after all.

ROSS: Why would anyone design an AI capable of deception for everyday use? Except for a movie or a game, or some sort of entertainment application, I don’t really see the point.

A research group at Google wrote a comprehensive paper on the current realistic dangers of AI, including the danger that AI might deceive us in certain respects.

Needless to say, active precautions are taken in current research to avoid behaviors that might be detrimental to human safety, and various forms of deception are being studied in relation to AI systems theoretically capable of such an act.

But it’s important to note that the human quality of deception does not necessarily apply to AI, because AI is not human. As Dijkstra said, asking whether a machine can think is equivalent to asking whether a submarine can swim. I’d say asking whether a machine can “deceive” should be held to the same semantic test. AI behavior that, in certain cases, may look like deception to a human will often be far simpler and more innocent behavior than we might imagine.

MARTIN: The trust issue will definitely be important and there are no clear answers. The problem is that AI will be used by cyber-criminals and the like. Yes, it is reasonable to have even higher standards for AI—but not clear how they will be enforced.

Do AI researchers look to other academic disciplines such as the social sciences to bolster their understanding of how AI will fit into society and be perceived by humans?

FELIX: Yes! One such discipline is linguistics. In order to understand a person’s speech, an AI has to understand how to break down language into something it can semantically understand.

Of course, there are always points where researchers should have leaned a bit harder on the social sciences. An AI handing down jail sentences in Florida is disproportionately sentencing black men to longer prison sentences than white men. Had the creators of this AI done better research into the social phenomena surrounding our court system and its history of conviction and sentencing, they might have created a model that better captured the nuances of the world rather than exacerbating its problems.

ROSS: Absolutely. AI exists at the intersection of pretty much every academic discipline taught at a major university. For AI researchers to consider themselves merely computer scientists would be highly unproductive for everyone.

Despite being an AI expert, I do not actually hold a degree in computer science—I’m a self-taught programmer. My bachelor’s degree from MIT is in economics, and my graduate degree is from NYU ITP, which is like art school for engineers or engineering school for artists.

Needless to say, in my independent research career, I’ve learned to work between disciplines. The tools of non-CS disciplines I’ve used range from those of sociology and statistics to those of design and communication. I actually believe that AI, at this point, is as much (if not more) a design challenge as a computational one.

We hear a lot about how AI will erase jobs, but what will cheap AI labor mean for entrepreneurs and creators?

MARTIN: It may be easier to start a small business than ever before because you will have access to very powerful tools. To some extent this is already true—but unfortunately the economic data does not show that the economy is becoming more dynamic as a result. There are also issues with a few big companies dominating markets. Also, if AI results in significant job losses in the future that will undermine the market for entrepreneurs. Who will they sell their products to?

FELIX: It’ll likely mean hiring more sophisticated workforces for less. For example, Uber currently views self-driving cars as an existential threat to its business and is rushing to be first to market with a fleet of them. If it is first, Uber will likely be in a position to take over any human-powered driving service on the planet.

At what point does robotic ingenuity and creativity become art? Do you think we can ever sincerely describe a novel or a painting done by an AI as self-expression?

FELIX: Now this is a particularly interesting question, and perhaps it goes to the foundational questions of “What is art?” and “Is self-expression necessary to create it?” I think Go players would argue that DeepMind is already creating art with its perfect, unprecedented play.

But can we call it self-expression? I don’t know. Does self-expression require a self? If so, then we’ll need to define the requirements of what “self” actually is before we get to answering this question. It may be a long time though. That’s a question even the philosophers haven’t managed to answer.

ROSS: Advanced chess, also known as centaur chess, is a chess variant where both players have access to a powerful chess computer. Games played exhibit extremely complex tactics, unseen in games between humans or between computers alone. What can this tell us about how we should be making art?

MARTIN: This is a philosophical question. Some people might say it can’t be “art” if it is not created by a human. On the other hand there are already original paintings and symphonies generated by algorithms. If you can’t tell it was produced by a machine, and if it creates an emotional reaction in people, then I would say that qualifies as original art.

What do you think the most interesting or unusual application of AI in the near future might be?

MARTIN: I think the two areas with perhaps the most potential are health care and education. These sectors are imposing very high costs on us all—so if we can figure out how to use AI to truly transform them, there will be huge benefits to society.

FELIX: This is hard. AI is already far more ubiquitous than we generally think it is. It’s already capable of handing down sentences, finding food and shopping, driving cars, parsing legal documents, playing Go, stacking blocks, and identifying faces.

I think the truly interesting AIs will be the ones capable of generative tasks. This means doing things they weren’t designed for, but using their knowledge of the world to derive new tasks to fulfill. But then this is what humans do. Perhaps what I’m saying is that, after all my stipulations and caveats, the most interesting AIs will be the human-like ones.

ROSS: Literally anything anyone can imagine.

AI is a term onto which we project our hopes, desires, and fears in the aggregate. It reflects us not as individuals, but as humans, and will continue to do so far into the future.

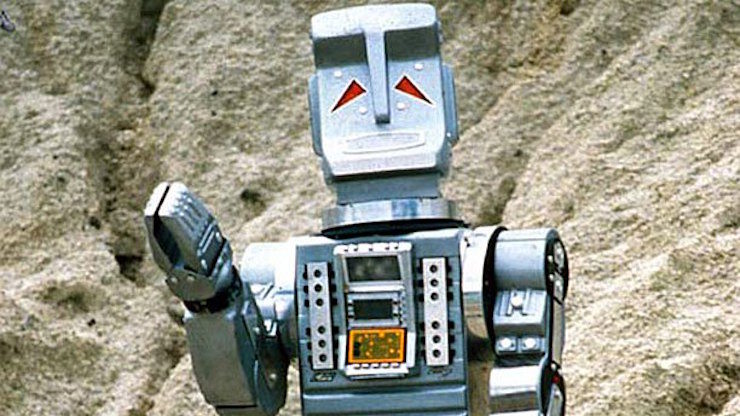

Top image: The Hitchhiker’s Guide to the Galaxy (BBC, 1981)

Nicky Drayden is a Systems Analyst who dabbles in prose when she’s not buried in code. She resides in Austin, Texas, where being weird is highly encouraged, if not required. Her debut novel The Prey of Gods, forthcoming from Harper Voyager this summer, is set in a futuristic South Africa brimming with demigods, robots, and hallucinogenic hijinks. Catch her on Twitter @nickydrayden.

Marina J. Lostetter’s original short fiction has appeared in venues such as Lightspeed, InterGalactic Medicine Show, and Flash Fiction Online. Originally from Oregon, she now lives in Arkansas with her husband, Alex. Her novel, Noumenon, is an epic space adventure starring an empathetic AI, alien megastructures, and generations upon generations of clones. Marina tweets as @MarinaLostetter.