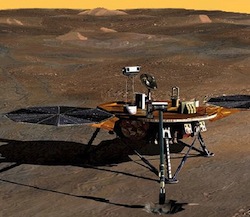

Not long ago, Wil Wheaton mentioned that Jonathan Coulton “needs to write a sad song from Mars Phoenix’s POV about finishing its mission and going to sleep.” Besides thinking this is a great idea, I instantly pictured the lander working valiantly to the very end, alone in the Martian terrain. I became even sadder after reading this, a sort of obituary for Phoenix. I wondered if he knew how much we down here admired the effort.

Hmm. See what I did there? I assigned heroic, tragic character traits, gender and morals to a machine. And it feels perfectly normal to do so. The anthropomorphism stands out to me here because it’s a machine that in no way appears human and is on another planet, but the truth is I anthropomorphize all the time. We extend our humanity to the things we make. Or, we make things as extensions of our humanity.

I’ve read stories of soldiers in Afghanistan and Iraq becoming very distraught over the damage or destruction of robots designed to seek out or dismantle explosives. The soldiers’ emotions go beyond concern over equipment. They care about their fallen comrades, fellow soldiers who save lives without question. I’ve never been in combat, nor do I claim to have more than anecdotal understanding of a soldier’s emotional needs. But I think that in a dangerous situation like that, it’s perfectly reasonable to honor whatever is reliable and keeps you safe, be it a person or a machine.

When I think of what makes humans unusual among animals, I think it isn’t intelligence or thumbs or souls. I think it’s our propensity for thinking symbolically, for assigning meaning. That said, other animals may also do this, but to assume so may in itself be anthropomorphic thinking, so I’m not sure. We readily, and constantly, place meanings upon phenomena that transcend the literal function or physical reality of the phenomena. This is why we have baby dolls, and writing, and games, and governments, and icons and eulogies for robots. Or eulogies, period. It’s why we stop at stop signs and cry at the movies.

On one hand, you could say this places us permanently in a state of fiction, ignorant of the Real with a capital Lacanian R. But on the other hand, without symbolic thinking, we have instinct alone. Given that human beings are not the fastest or strongest animals, are born entirely dependent, and have senses that are kinda so-so, we are incredibly vulnerable without myriad social structures, all of which are made of a mixture of instinct and symbol.

In *Moral Machines*, Wendell Wallach and Colin Allen investigate robotics and artificial intelligence from a philosophical and moral perspective. How do we teach morality to machines? What morality do we teach? Once a machine can think ethically, should it be considered legally and morally equal to other sentient beings? Questions of how we teach a machine to think ethically are, of course, framed entirely by how human beings assign morality. The science involved may be new, but the questions are old, asked in folktales and religion, and by speculative fiction authors from E.T.A. Hoffmann to Paolo Bacigalupi and countless others in between. The questions remain as yet unanswered except in theory.

I won’t get into the science of artificial intelligence, as I don’t understand it and won’t pretend I do. But what I wondered as I read that book, and revisited with Wil Wheaton’s comment, is why we want machines with personalities, imagined or programmed. Granted, a big part of why we’d want robots to reason is purely functional. They’d theoretically be a greater boon to their creators if they made their own decisions. But beyond the purely functional, I think we want them to have personalities and imaginations and drives and thoughts and morals and ethics because we want a justification for anthropomorphic beliefs.

Part of it may be simply that human beings want to make human beings. Could the desire for artificial intelligence and androids and all that be a symbolic manifestation of the biological drive to reproduce? I think that, given the slightest chance, we’d treat robots as people even if we knew they weren’t.

Children are both aware and unaware that dolls are not alive. They are perfectly cognizant that Bonky the teddy bear is not sentient or biological, and just as perfectly content treating it like a living friend. It may be easy to dismiss this as only pretending (at least for those who find pretending easy to dismiss), but to some extent the symbolic humanity of dolls is no more or less pretend than what the soldier feels for the PackBot or TALON. Is the soldier pretending? If an offering of food is made to a religious icon, is this pretending? The person offering may be totally aware that the statue is not alive, and still give it fruit, with all sincerity. We are symbolic beings and we want our symbols to be real.

Now let’s imagine that there’s a moral robot. How long would it take before there were decorated robot soldiers, robot movie stars, artificial priests, teachers, spouses, parents, best friends and children? How long before we can legally will our estate to a robot? I suspect that, faced with a machine that can actually think, our anthropomorphic tendencies would go ape, so to speak, and very quickly we’d be thinking of robots as equal or superior to biological humans. More human than human… fleeing the Cylon tyranny… I for one would welcome our robot overlords, etc.

And so, dear readers communicating with me through symbols via machines, what do you think?

Jason Henninger dreams of electric…never mind.